OneSignal has been using Rust extensively in production since 2016, and a lot has changed in the last four years – both in the wider Rust ecosystem and at OneSignal.

At OneSignal, we use Rust to write several business-critical applications. Our main delivery pipeline is a Rust application called OnePush. We also have several Rust-based Kafka consumers that are used for asynchronous processing of analytics data from our on-device/in-browser SDKs.

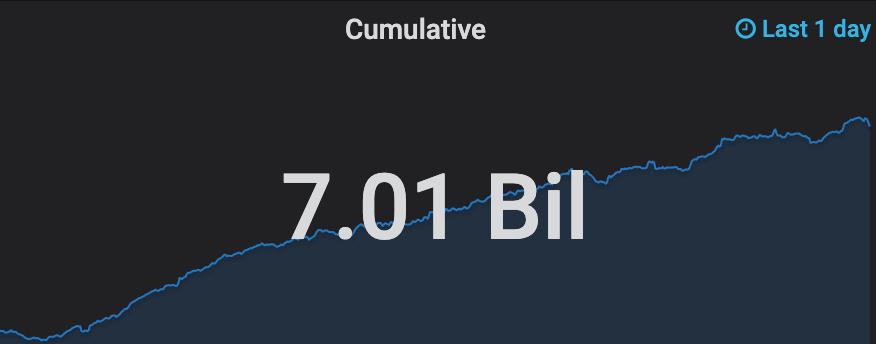

Performance Numbers ⚡

Since the last blog post about OnePush, the volume of notifications delivered has increased dramatically. When we published that blog post back in 2016, we were delivering 2 billion notifications per week, and hit a record of 125,000 deliveries per second.

Just this month, we crossed the threshold of sending 7 billion notifications per day, and hit a record of 1.75 million deliveries per second.

That's 24x the overall rate, and 14x the maximum burst rate! We credit this huge expansion to the performance gains won from our use of Rust.
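As a quick sanity check, both multipliers follow directly from the figures quoted above. The sketch below only restates that arithmetic. The function names are illustrative and not taken from the OnePush codebase, and the weekly comparison assumes the quoted daily rate holds for all seven days.

```rust
/// Ratio of the current weekly volume to the 2016 weekly volume.
/// Assumes the quoted daily rate is sustained for seven days.
fn overall_growth(per_day_now: f64, per_week_2016: f64) -> f64 {
    (per_day_now * 7.0) / per_week_2016
}

/// Ratio of the current peak deliveries per second to the 2016 peak.
fn burst_growth(peak_now: f64, peak_2016: f64) -> f64 {
    peak_now / peak_2016
}
```

With the numbers from this post, `overall_growth(7e9, 2e9)` comes to 24.5 and `burst_growth(1.75e6, 125e3)` comes to 14.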

To mark these throughput records, we're looking back at how Rust has transformed the way we deliver notifications here at OneSignal.

What's changed 🔀

OnePush has been in production at OneSignal since January 2016. Back then, the latest stable release was Rust 1.5 (we’re now on 1.44!).

Rust has seen a lot of changes, libraries, and new features since then. Here are some of the highlights:

- `try!` macro deprecated in favor of `?`

- Rust 2018 edition

  - Scoping rules change

- Entire futures ecosystem

  - futures 0.1.0 released in July 2016

  - tokio 0.1.0 released in January 2017

  - `std::future::Future` stabilized in July 2019

  - async/await stabilized in November 2019

- `impl Trait` syntax

- `dyn Trait` syntax

- Changes to the borrow system

  - Non-lexical lifetimes

  - MIR-based borrowck

- Error handling libraries have come into and gone out of favor

  - error-chain

  - failure

  - anyhow

  - thiserror

- The architecture of OnePush is largely the same, but we did make a few changes

  - Replaced r2d2 with our own futures-based pooling library called l337. The bb8 crate was available, but we had some concerns about running it in production at the time

  - Replaced multiple thread pools with futures running on tokio executors

Our previous OnePush blog post also highlighted some pros and cons of choosing Rust. Although some of these have changed, others have stayed the same. Here's how the pros and cons compare now:

- Rust still allows us to build highly robust, performant systems without the cognitive overhead of worrying about safety

- Rust is far more mature than it was in 2015, but there is still a large amount of change in the language. Steve Klabnik has a fantastic article on how often Rust changes, but the library ecosystem has gone through even more evolution than the language itself.

- rust-analyzer has far exceeded the simple auto-completion that was available with racer in 2016. We now have rich tooltips, go-to-definition, error/warning/lint highlighting, and fantastic refactoring support

- The compiler has added incremental compilation since we first started using Rust, and there has been much work done on improving the compiler speed. Go still beats it in a raw compilation speed race, but Rust gets closer with every release.

- OnePush was initially using a pinned nightly version of Rust so that we could use custom derive. We were able to move to a stable version of the compiler when this was no longer feature-gated to nightly.

- The HTTP issues talked about in that blog post have largely gone away as we've adopted the std::future::Future and async/await ecosystem

- The async Redis and Postgres clients that we were waiting for have been created by the community

  - redis-rs added futures support in 0.15

  - tokio-postgres supports async execution of postgres queries

Rust has grown a lot from 2016 to now, but one of the most exciting ways that Rust has become more useful is in its adoption of asynchronous programming.

Futures 0.1

We initially used threadpools and channels for processing items asynchronously, but over time we heavily adopted the futures-0.1 ecosystem internally in all of our Rust applications. We wrote manual state machines that implemented Future, and we used huge chains of .and_thens and other combinators to write more procedural-like chains of future execution. This was manageable, but there were several issues with it:

- Lots of boilerplate associated with using future combinators

- Difficult to compose futures if there wasn’t a combinator for exactly your task

- All combinator futures needed to be `'static`, so no borrows could be shared between futures

- Complex lifetimes are difficult to manage with Rust’s closures, and using combinators ties us directly to closures.

This pattern for performing postgres queries was all around our codebases:

```rust
fn get_fields(
    connection: Arc<Mutex<Connection>>,
) -> impl Future<Item = (Arc<Mutex<Connection>>, Vec<i32>), Error = Error> {
    let prepared = connection
        .lock()
        .prepare("select field from foo where bar=$1");

    prepared.and_then(|stmt| {
        let fut = connection.lock().query(&stmt, &["baz"]);

        // Return the connection alongside the rows so the caller can keep using it.
        fut.map(move |rows| (connection, rows.map(|m| m.get(0)).collect::<Vec<i32>>()))
    })
}
```

This has several concrete issues:

- We need to use an `Arc<Mutex<Connection>>` instead of an `&mut Connection`

  - Must use `Arc` so that type will be `'static`

  - Must use `Mutex` so that value can be used mutably from different future branches

    - tokio-postgres required `&mut self` for important methods at this time

- Must either clone the `Arc` or thread the connection value through every future in the function

  - We didn’t want to incur the penalty of constantly cloning `Arc`s, so we went with the latter.

  - This led to some very large tuples when we had to perform multiple queries

  - There was a lot of code just adding and removing values from these large result tuples

- Before `impl Trait` was stabilized, the function signature was even worse:

  - We would have either needed to box the future, or explicitly call out the whole execution chain

  - This either slowed down execution or made refactoring extremely costly
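The trade-off in that last point (box the return value, or spell out the whole chain) is not specific to futures. Here is a minimal sketch that uses iterator adapters from the standard library as a stand-in for futures 0.1 combinators, an assumption made so that it compiles without external crates. All function names are hypothetical.

```rust
use std::iter::{Filter, Map};
use std::ops::Range;

fn is_even(n: &i32) -> bool {
    n % 2 == 0
}

fn square(n: i32) -> i32 {
    n * n
}

// Option 1: name the whole adapter chain in the signature. This only works
// with function pointers (closure types cannot be written down), and any
// change to the body changes the return type.
fn explicit(limit: i32) -> Map<Filter<Range<i32>, fn(&i32) -> bool>, fn(i32) -> i32> {
    (0..limit)
        .filter(is_even as fn(&i32) -> bool)
        .map(square as fn(i32) -> i32)
}

// Option 2: box the chain. The signature stays short, at the cost of a heap
// allocation and dynamic dispatch.
fn boxed(limit: i32) -> Box<dyn Iterator<Item = i32>> {
    Box::new((0..limit).filter(|n| n % 2 == 0).map(|n| n * n))
}

// Option 3: `impl Trait`. Short signature, closures allowed, no boxing.
fn opaque(limit: i32) -> impl Iterator<Item = i32> {
    (0..limit).filter(|n| n % 2 == 0).map(|n| n * n)
}
```

All three functions yield the same items. Only the signature and the cost of producing them differ, which is the same choice we faced with combinator chains.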

async/await

In November 2019, the async/await feature was stabilized along with the release of Rust 1.39, allowing futures to easily be written in a very similar manner to synchronous code. It had several key advantages over the combinator system:

- Borrows can be made without making a future non-`'static`

  - As long as the value you're borrowing from is owned by the current task

- Removing multiple layers of nesting that were previously needed with `.and_then` and `.map`

- The same control flow methods used in synchronous code can now be used in async code

The get_fields function, shown above written with combinators, was easily rewritten with async/await:

```rust
async fn get_fields(connection: &Connection) -> Result<Vec<i32>, Error> {
    let stmt = connection
        .prepare("select field from foo where bar=$1")
        .await?;

    let rows = connection.query(&stmt, &["baz"]).await?;

    Ok(rows.map(|m| m.get(0)).collect())
}
```

This version demonstrates all of the benefits of async/await over combinators:

- `connection` can be a normal borrow

- No deep nesting associated with multiple layers of closures

- Normal error handling (`?`) can be used
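To try these points without a database or an async runtime, here is a self-contained sketch. The `Connection` and `Error` types are hypothetical in-memory stand-ins (not tokio-postgres), `get_fields` takes a `&mut` borrow and a `bar` parameter purely for illustration, and `block_on` is a toy busy-polling executor built only on the standard library. It is not something to use in production.

```rust
use std::future::Future;
use std::sync::Arc;
use std::task::{Context, Poll, Wake, Waker};

/// Hypothetical in-memory stand-in for a database connection.
struct Connection {
    rows: Vec<(String, i32)>, // (bar, field)
    queries_run: u32,
}

#[derive(Debug, PartialEq)]
struct Error(String);

impl Connection {
    /// "Prepares" a statement; rejects empty SQL so `?` has an error to propagate.
    async fn prepare(&mut self, sql: &str) -> Result<String, Error> {
        if sql.is_empty() {
            return Err(Error("empty statement".into()));
        }
        Ok(sql.to_string())
    }

    /// Returns the `field` of every row whose `bar` column matches.
    async fn query(&mut self, _stmt: &str, bar: &str) -> Result<Vec<i32>, Error> {
        self.queries_run += 1;
        Ok(self
            .rows
            .iter()
            .filter(|(b, _)| b.as_str() == bar)
            .map(|(_, field)| *field)
            .collect())
    }
}

/// A plain mutable borrow is held across two await points: no Arc, no Mutex.
async fn get_fields(connection: &mut Connection, bar: &str) -> Result<Vec<i32>, Error> {
    let stmt = connection
        .prepare("select field from foo where bar=$1")
        .await?;
    let fields = connection.query(&stmt, bar).await?;
    Ok(fields)
}

struct NoopWaker;

impl Wake for NoopWaker {
    fn wake(self: Arc<Self>) {}
}

/// Toy executor: polls a single future in a loop until it completes.
fn block_on<F: Future>(fut: F) -> F::Output {
    let waker = Waker::from(Arc::new(NoopWaker));
    let mut cx = Context::from_waker(&waker);
    let mut fut = Box::pin(fut);
    loop {
        if let Poll::Ready(output) = fut.as_mut().poll(&mut cx) {
            return output;
        }
    }
}
```

Calling `block_on(get_fields(&mut conn, "baz"))` on a connection holding a few rows returns the matching fields, and the caller still owns `conn` afterwards, so nothing has to be threaded back out through a tuple.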

This held the opportunity to remove large amounts of future-juggling code that we had in our Rust projects. We were hugely excited about async/await when it was first released, and landed a PR rewriting a portion of our tests to use it instead of combinators the day after 1.39 was announced.

We were immediately taken by how much simpler it was to write futures using this new syntax compared with the old combinators. There were still a few signs of the newness of async/await:

- Libraries generally did not support async/await yet, as it was based on the newer, incompatible `std::future::Future` trait, as opposed to futures-0.1

  - futures-01 based Futures were suffixed with `.compat()` to make them work with async/await

  - `std::future::Future` based futures were suffixed with `.boxed().compat()` to make them work with older executors

    - If a new-style future did not return a `Result`, that call chain expands to `.unit_error().boxed().compat()`

  - The tokio library was not compatible with the `std::future::Future` system

    - We tried using async-std (an alternative library to tokio) as our main futures runtime, but the libraries were (at this point at least) completely incompatible, and any calls to `tokio::spawn` nested deep in our codebase would fail if there wasn't a compatible tokio runtime active

  - These issues have largely been resolved at this point, but when async/await was first stabilized in November 2019, they were real concerns.

- It's easy to overwhelm async runtimes with seemingly innocuous code

  ```rust
  async fn service_monitor() {
      loop {
          // `service_has_stopped` stands for some shared flag; it is not defined here.
          if service_has_stopped {
              restart_service().await;
          }
      }
  }

  async fn restart_service() {}
  ```

  - Why is this a problem?

    - An `async` function holds the executor for as long as it's executing, only releasing it when it reaches an `.await` point that is not ready yet.

    - Since `service_has_stopped` will probably be false most of the time, this function will hold the executor for a very long time without yielding.

    - This results in the single async task using 100% (or nearly 100%) of whatever executor thread it's running on.

  - This can be solved by adding a small delay to the end of each iteration of the loop that yields control away from this `async fn`.

  - After a few performance issues in prod, we carefully avoided async functions with unconstrained loops

    - Unless they contained some task yielding within them as described above

  - It's common to use spin-locking on a thread like this, since a thread will be scheduled its own CPU time by the OS

    - Since async/await relies on explicit context switching, the same assumptions don't apply

- One of the biggest benefits of async programming, cooperative multithreading, is also an issue

  - Bugs are no longer segmented to their own threads; they can cause issues for other tasks expecting to share the same executor

  - The example above doesn't just cause performance issues for `service_monitor`: any tasks scheduled on the same executor will not be picked up as long as `service_has_stopped` is false.
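The effect of a yield point in `service_monitor` can be reproduced with nothing but the standard library. The sketch below is an illustration under stated assumptions, not our production code: `YieldNow`, `run_all`, `flaky_service` and `demo` are hypothetical names, the executor is a toy round-robin loop, and an explicit yield is used in place of the small delay mentioned above. Real runtimes such as tokio ship their own yield and sleep helpers.

```rust
use std::cell::Cell;
use std::future::Future;
use std::pin::Pin;
use std::rc::Rc;
use std::sync::Arc;
use std::task::{Context, Poll, Wake, Waker};

/// Future that returns `Pending` exactly once, handing control back to the executor.
struct YieldNow {
    yielded: bool,
}

impl Future for YieldNow {
    type Output = ();

    fn poll(mut self: Pin<&mut Self>, cx: &mut Context<'_>) -> Poll<()> {
        if self.yielded {
            Poll::Ready(())
        } else {
            self.yielded = true;
            cx.waker().wake_by_ref(); // ask to be polled again
            Poll::Pending
        }
    }
}

fn yield_now() -> YieldNow {
    YieldNow { yielded: false }
}

struct NoopWaker;

impl Wake for NoopWaker {
    fn wake(self: Arc<Self>) {}
}

type Task = Pin<Box<dyn Future<Output = ()>>>;

/// Toy single-threaded executor: polls every unfinished task in turn.
fn run_all(mut tasks: Vec<Task>) {
    let waker = Waker::from(Arc::new(NoopWaker));
    let mut cx = Context::from_waker(&waker);
    while !tasks.is_empty() {
        // Keep only the tasks that are still pending after this round.
        tasks.retain_mut(|task| task.as_mut().poll(&mut cx).is_pending());
    }
}

/// Monitor loop with a yield point. Without the `yield_now().await` line this
/// loop would never return to `run_all`, and `flaky_service` would never run.
async fn service_monitor(stopped: Rc<Cell<bool>>, restarts: Rc<Cell<u32>>) {
    loop {
        if stopped.get() {
            restarts.set(restarts.get() + 1);
            return; // restart once and exit so the demo terminates
        }
        yield_now().await;
    }
}

/// Simulated service that runs for a few scheduler rounds and then stops.
async fn flaky_service(stopped: Rc<Cell<bool>>) {
    for _ in 0..3 {
        yield_now().await;
    }
    stopped.set(true);
}

/// Runs both tasks on the toy executor and reports how many restarts happened.
fn demo() -> u32 {
    let stopped = Rc::new(Cell::new(false));
    let restarts = Rc::new(Cell::new(0));
    let monitor: Task = Box::pin(service_monitor(stopped.clone(), restarts.clone()));
    let service: Task = Box::pin(flaky_service(stopped));
    run_all(vec![monitor, service]);
    restarts.get()
}
```

`demo()` finishes with one recorded restart because the monitor gives the other task a turn on every iteration. Removing the yield turns the same program into an infinite loop on the first poll of `service_monitor`.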

- There were a few cases that resulted in some rather bad diagnostics from the compiler. They all have issues filed now, but some of these are still unresolved

  - Async functions generate massive types behind the scenes that can overwhelm the compiler

    - This is now fixed on nightly

  - Sometimes, line numbers are not reported for compile errors in async functions

  - When you try to spawn a non-Send/Sync future, you can get massive type errors

    - This error was one of the most common that our team encountered

    - Combine this with the fact that values are not dropped until the end of their enclosing scope (often the end of the function), and it's very easy to have some non-Send type held across an await point somewhere

    - Common causes

      - `std::sync::MutexGuard`

      - `std::cell::Cell`

      - `std::cell::RefCell`

    - Spot the difference between these two async fns

      ```rust
      use std::sync::Mutex;

      // Stand-in for a real network call.
      async fn make_request() {}

      async fn request_and_count_1(counter: Mutex<i32>) {
          let mut lock = counter.lock().unwrap();
          *lock += 1;
          make_request().await;
      }

      async fn request_and_count_2(counter: Mutex<i32>) {
          {
              let mut lock = counter.lock().unwrap();
              *lock += 1;
          }
          make_request().await;
      }
      ```

      - `request_and_count_1` is not `Send` because `lock` lives until the end of the function

        - This means that it is held across the await point for `make_request`

      - `request_and_count_2` is `Send` because the explicit inner scope ensures that `lock` is dropped before the await point
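One way to catch this kind of mistake early, instead of waiting for a spawn call somewhere else to fail, is a compile-time `Send` assertion on the future. The sketch below is a hedged illustration using only the standard library: `assert_send`, `request_and_count`, `block_on` and `demo` are hypothetical names, the counter is borrowed rather than moved for convenience, and the executor is a toy busy-polling loop.

```rust
use std::future::Future;
use std::sync::{Arc, Mutex};
use std::task::{Context, Poll, Wake, Waker};

/// Compiles only if `T` is `Send`; hands the value back unchanged.
fn assert_send<T: Send>(value: T) -> T {
    value
}

// Stand-in for a real network call.
async fn make_request() {}

/// Scoped variant: the guard is dropped before the await point.
async fn request_and_count(counter: &Mutex<i32>) {
    {
        let mut lock = counter.lock().unwrap();
        *lock += 1;
    } // `lock` is dropped here
    make_request().await;
}

// Unscoped variant, left commented out because it is expected to be rejected
// at compile time when passed to `assert_send`: the `MutexGuard` would still
// be alive at the await point, and `MutexGuard` is not `Send`.
//
// async fn request_and_count_unscoped(counter: &Mutex<i32>) {
//     let mut lock = counter.lock().unwrap();
//     *lock += 1;
//     make_request().await;
// }

struct NoopWaker;

impl Wake for NoopWaker {
    fn wake(self: Arc<Self>) {}
}

/// Toy executor: polls a single future in a loop until it completes.
fn block_on<F: Future>(fut: F) -> F::Output {
    let waker = Waker::from(Arc::new(NoopWaker));
    let mut cx = Context::from_waker(&waker);
    let mut fut = Box::pin(fut);
    loop {
        if let Poll::Ready(output) = fut.as_mut().poll(&mut cx) {
            return output;
        }
    }
}

/// Checks that the scoped future is `Send`, runs it, and returns the counter.
fn demo() -> i32 {
    let counter = Mutex::new(0);
    let fut = assert_send(request_and_count(&counter));
    block_on(fut);
    let value = *counter.lock().unwrap();
    value
}
```

Because `assert_send` sits right next to the function under test, the compiler error points at the offending future rather than at a distant spawn site.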


Looking Ahead

Over the last four years, there have been a lot of new features and improvements added to Rust. We think that the opportunity for improvement is still there, and there are some very exciting RFCs on the horizon:

- Generic associated types (#1598) will allow for async functions in trait implementations, along with a host of other things

- Custom test frameworks (#2318) would be a huge improvement and allow deeper integration with CI services for flaky test analysis

Both of these have been open for a while and still have lots of work before they can be merged, but they both represent huge steps forward for the language. With `proc_macro_hygiene` landing in 1.45 next month, some of the biggest features holding people to nightly Rust are making their way to stable. This is a great sign of Rust maturing, and Rust is going to continue to be a central part of OneSignal's strategy going forward.
